In this “addendum” section, I want to discuss Examples (d) and (e) in Chapter V Section 2. I also want to add a couple of examples and problems.

The problems in this lecture are important, so I would like you to write them up and submit them (as you do the assignment problems).

These problems deal with situations where the probability of something is itself unknown, so that we can only assign probabilities to its possible values. These problems also relate to what probability means: a probability does not only say something about a system, but also something about our state of knowledge of that system.

Insurance risk

![xkcd comic. [lightning flashes]

"Whoa! We should get inside!"

"It's okay! Lightning only kills about 45 Americans a year, so the chances of dying are only one in 7,000,000. Let's go on!"

Caption: "The annual death rate among people who know that statistic is one in six."](https://mcintyre.bennington.edu/wp-content/uploads/2020/08/xkcd-conditional_risk.png)

One class of problems with unknown probabilities comes up often in calculating risks for insurance problems. It comes up in many other places as well. I’ll try to illustrate it with an example/problem:

Problem 1: Suppose that we are running an insurance company that insures people against wolf attacks. Suppose that people fall into two types: the normal type of person has a risk of wolf attack of 6% per year (probability 0.06). The other type of person is especially tasty to wolves, and has a risk of wolf attack of 60% per year (probability 0.60).

These risks are constant: each year, a normal person has the same 6% chance of wolf attack.

The population is made up of 5/6 normal people, and 1/6 especially tasty people.

a) Find the probability that a randomly selected person will be attacked by wolves this year.

b) Suppose someone has been attacked once already. If we know that about the person, what is the probability that they will be attacked again? How does that compare with the probability in (a)?

This reasoning is used by insurance companies to justify raising your rates if you have an accident. The reasoning is sound, but it is sometimes said that “being attacked by wolves makes you more likely to be attacked again”. This is NOT true in our model. We are assuming that normal people have a constant rate of wolf attacks, and tasty people have a constant rate of wolf attacks. That rate did NOT change for the person when they got attacked by a wolf.

What DID change is our state of knowledge about the person. Before the wolf attack, we would have guessed that they were tasty with 1/6 probability. After the wolf attack, we would up the likelihood that the person was of the tasty type. The probability is not only a statement about the person; it is a statement about our state of knowledge about the person.

This is what I meant when I said that the probabilities are unknown. We are either dealing with a 0.06 probability of wolf attacks, or a 0.60 probability, but we don’t know which.

Problem 1 continued:

c) Suppose someone was attacked by wolves this year. Find the probability that they were one of the tasty people.

d) Suppose someone was attacked by wolves two years in a row. Find the probability that they were one of the tasty people.

e) Suppose that someone was attacked by wolves n years in a row. Find the probability that they were one of the tasty people.

Now, read and work through Example (d) in Chapter V Section 2, pages 121–123.

In class, Quang brought up an important point: we are assuming that being attacked in year 2 is independent from being attacked in year 1, for both non-tasty people and tasty people individually. But that does NOT mean that being attacked in year 2 is independent from being attacked in year 1 for the whole population!

Problem 1 continued:

f) Show that being attacked in year 2 is NOT independent from being attacked in year 1. (You have already calculated $P(A_1)$, the overall probability that someone in the whole population is attacked in year 1. Calculate $P(A_2A_1)$ and $P(A_2)$, and check that $P(A_2A_1)\neq P(A_2)\cdot P(A_1)$. )
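Once you have worked Problem 1 by hand, one way to sanity-check your answers is a quick simulation. The following is only a rough sketch of the model described above (two types of people, each with a constant yearly risk); the function name `simulate_two_years`, the sample size, and the seed are illustrative choices, not part of the problem.

```python
import random

def simulate_two_years(n_people=200_000, seed=0):
    """Estimate P(A1), P(A2), and P(A1 A2) by simulating the wolf model.

    Assumptions taken from the problem: 1/6 of people are tasty (risk 0.60
    per year), 5/6 are normal (risk 0.06 per year), and each person's risk
    is the same in both years.
    """
    rng = random.Random(seed)
    count_y1 = count_y2 = count_both = 0
    for _ in range(n_people):
        # The person's type is fixed once; it does not change after an attack.
        risk = 0.60 if rng.random() < 1 / 6 else 0.06
        attacked_y1 = rng.random() < risk
        attacked_y2 = rng.random() < risk
        count_y1 += attacked_y1
        count_y2 += attacked_y2
        count_both += attacked_y1 and attacked_y2
    return count_y1 / n_people, count_y2 / n_people, count_both / n_people

p_a1, p_a2, p_both = simulate_two_years()
print("P(A1) estimate:            ", p_a1)
print("P(A2) estimate:            ", p_a2)
print("P(A1 A2) estimate:         ", p_both)
print("P(A1) * P(A2) estimate:    ", p_a1 * p_a2)
print("P(A2 | A1) estimate:       ", p_both / p_a1)
```

Note that inside the loop each person's two years are drawn independently with the same risk; any dependence you see between the two years in the output comes only from mixing the two types together.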

Unknown risks

There is another variant of the previous problem that is often important.

Problem 2: Suppose that a company is selling wolf repellent. We want to determine if it works. Unfortunately, for ethical and practical reasons, we can’t experiment and directly allow people with or without the repellent to be attacked by wolves. So the information we have is from forest ranger reports: we know how many people are attacked by wolves, and of those people, how many were wearing the wolf repellent. What we would like to know is, what is the relative change in probability of wolf attacks without repellent versus with repellent?

Write the thing that we want, and the things we know, as conditional probabilities. Find a formula for the thing we want in terms of the thing we know. Do we need other information? If so, what? Can you express the final result as a simple rule? Try making up some numbers to see how it works.

False positives and false negatives

There is a closely related problem of false positives and false negatives for a test (for example, a medical test).

For example, suppose that some fraction of the population are werewolves. Let $W$ be the event that a person is a werewolf. For this example, I will assume that the probability $P(W)$, that a randomly selected person is a werewolf, is a known number. Then $W'$ is the event that a person is not a werewolf.

We are administering werewolf tests. Let $N$ be the event that the test comes back negative, and let $P=N'$ be the event that the test comes back positive.

Now, the test is not perfect. There is some chance that a person who is a werewolf nevertheless tests negative on the test; this is called a false negative. There is also a chance that a person who is not a werewolf nevertheless tests positive on the test; this is called a false positive. I will assume that the probabilities of false negatives and false positives are both known.

A surprising fact is that even a test which is in principle very good (low false positive and false negative probabilities) can actually give very poor results.

Problem 3:

a) Write the probability of a false negative as a conditional probability. Do the same for a false positive.

b) Suppose someone tests positive for being a werewolf. What is the probability that they are in fact a werewolf? Write this as a conditional probability, and then find a formula for it, expressed only in terms of things that we have assumed to be known.

c) Suppose that the probabilities of false negatives and false positives are both 1% (so the test is quite accurate). Suppose that 1% of the population are actually werewolves. Find the numerical probability that, if someone tests positive for being a werewolf, they actually are a werewolf.

Do you see the problem? If 1% of the population are werewolves, the test will identify nearly all of them correctly. But it will also identify 1% of the remaining 99% of people who are not werewolves as werewolves, which will also give another roughly 1% of the population testing positive. That means about a 50% chance that a person testing positive really is a werewolf, even though the test has an impressive-looking 99% accuracy.
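The counting argument in the previous paragraph can be checked with a few lines of Python. This is only a sketch: the population size of 10,000 and the function name `positive_test_counts` are illustrative assumptions, not part of the problem.

```python
from fractions import Fraction

def positive_test_counts(population, prevalence, false_neg, false_pos):
    """Expected numbers of true and false positives in a population.

    prevalence is the fraction of werewolves; false_neg and false_pos are
    the false negative and false positive probabilities of the test.
    """
    werewolves = population * prevalence
    non_werewolves = population - werewolves
    true_positives = werewolves * (1 - false_neg)   # werewolves who test positive
    false_positives = non_werewolves * false_pos    # non-werewolves who test positive
    return true_positives, false_positives

one_percent = Fraction(1, 100)
tp, fp = positive_test_counts(10_000, one_percent, one_percent, one_percent)
share_real = tp / (tp + fp)  # fraction of positive tests that are real werewolves
print(tp, fp, share_real)
```

With these numbers the two groups of positive tests are the same size (99 people each), so exactly half of the people who test positive are werewolves. Changing `prevalence` while keeping the error rates fixed is a quick way to explore how the result depends on the population.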

This is an unnecessary harm problem: a person testing positive may be subjected to unpleasant werewolf cures or restrictions unnecessarily. The opposite problem can also happen.

Problem 3 continued:

d) Find a formula for the probability that a person who tests negative is in fact not a werewolf, expressed in terms of things we know.

e) Suppose that the false negative and false positive rates are both 1%, and suppose that 50% of people are werewolves. Given these numbers, if a person tests negative, what is the numerical probability that they are, in fact, not a werewolf?

f) Suppose that a test is pretty good, say the false negative and false positive rates are both low. Under what conditions on the population will false positives still be a problem? When will they not be a problem? And same question for false negatives.

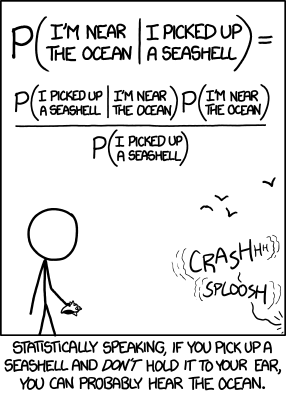

![xkcd comic.

Did the Sun just explode?

(It's night, so we're not sure.)

"This neutrino detector measures whether the sun has gone nova."

"Then, it rolls two dice. If they both come up six, it lies to us. Otherwise, it tells the truth."

"Let's try. Detector! has the Sun gone nova?"

"[roll] YES"

Frequentist statistician:

"The probability of this result happening by chance is 1/36=.027. Since p<0.05, I conclude that the sun has exploded."

Bayesian statistician:

"Bet you $50 it hasn't."](https://mcintyre.bennington.edu/wp-content/uploads/2020/08/xkcd-frequentists_vs_bayesians.png)

Totally unknown probabilities; Laplace’s example

This chapter is already getting long, so you should treat this last section as optional. It’s interesting, but only do it if you have time.

In our insurance example, we didn’t know whether the probability of wolf attack was 0.06 or 0.60. We found the probabilities of one or the other, based on our knowledge of how many wolf attacks occurred.

In that example, there were only two possibilities for the unknown probability, either 0.06 or 0.60. But what if we know nothing about the probability, so that it could be any number between 0 and 1? How could we model that?

To be more specific: let’s say that we are doing repeated trials (like with a die or a coin). There is some probability p of success on each trial, but we don’t know what p is. If we have some information about a run of successes or failures, can we make some statement about what we think p is?

For example, suppose we have a weird “coin” which we do not know to be fair. There is some probability p of heads on each flip, but we do not know that p=1/2. We do assume that p stays fixed: it is the same for each successive flip. Now, if we flip this coin 30 times and it comes up heads every time, we would strongly suspect that p is not 1/2 for this coin. We would suspect that p is closer to 1. And we would guess a probability of more than 1/2 for the 31st flip to be heads as well.

The simplest assumption about an unknown p is that it is anywhere from 0 to 1. But that gets into continuous variation, which is hard. So let’s make it discrete.

We could make it an urn problem. Let’s say we have an urn with 100 balls, each either black or red, but we don’t know how many are black and how many are red. We make draws with simple replacement: we pull out a ball and put it back, so the probability remains constant. Suppose we get a long run of red balls: what can we infer about the probability of red? More specifically, if we get a run of n red balls, what would be the probability that the (n+1)st ball is also red?

Since we don’t know how many red balls there are, we can make this more concrete by imagining 101 urns. In the first urn there are no red balls; in the second urn, 1 red ball; up to the 101st urn, which has 100 red balls. We select an urn at random (all with equal probability), and then we do repeated drawings (once we choose an urn, we stick with it for the rest of the experiment).

Rather than 100 balls in each urn, I could have said N balls in each urn. Then taking the limit as N gets large (“as N goes to infinity”), we could try to get back to the continuous range of possibilities for p that we started with.

This is the setup of Laplace’s example. The result he found is that, if N is very large and you draw n red balls in succession, then the probability of the (n+1)st ball being red is $$\frac{n+1}{n+2}.$$ So in our example of a coin which we don’t know is fair (we don’t know p), if it came up with a run of 30 heads, and we assume that p is equally likely to be anything, then we would expect a 31st head with a probability of 31/32=0.96875.
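If you want to see how close the finite-urn model comes to Laplace’s limiting value, here is a minimal sketch. It assumes the setup described above: N+1 equally likely urns, where urn k holds k red balls out of N, and draws are made with replacement. The function name `next_red_probability` is my own label for illustration. The formula in the code is just the conditional probability P(first n+1 draws red) / P(first n draws red), averaged over the urns.

```python
from fractions import Fraction

def next_red_probability(N, n):
    """P((n+1)st draw is red | first n draws were red) for N+1 equally likely urns.

    Urn k (k = 0, ..., N) holds k red balls out of N; draws are with replacement.
    """
    # P(first n draws red) = (1/(N+1)) * sum over k of (k/N)**n.
    # In the ratio, the factors 1/(N+1) cancel and the powers of N leave
    # a single factor of N in the denominator.
    run_n = sum(k ** n for k in range(N + 1))
    run_n_plus_1 = sum(k ** (n + 1) for k in range(N + 1))
    return Fraction(run_n_plus_1, N * run_n)

# Compare the finite-urn answer with (n+1)/(n+2) for a run of n = 30 reds.
for N in (100, 10_000):
    print(N, float(next_red_probability(N, 30)), 31 / 32)
```

Trying a few values of N gives a feel for how large N has to be before the finite-urn answer settles near (n+1)/(n+2).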

Again, as in the previous examples, this probability doesn’t represent anything about the coin: the coin has some definite p which doesn’t change from one flip to the next. The probability is a statement about our knowledge of the coin.

Laplace set up this example in part to talk about induction, in the philosophical sense of the word.

All this discussion has been to set you up to read Example (e), Chapter V, Section 2, pages 123–125. Read that section, and try to verify all the claims that the author makes.

(Note that there is a calculus step in (2.9). Here is the idea: the author is trying to evaluate the sum $$1^n+2^n+\dotsb +N^n.$$ You can represent this graphically by graphing $y=x^n$; then the terms of the sum are the heights of the graph at $x=1,2,\dotsc,N$. Think of each of those heights as the height of a rectangle, of width 1, poking up above the graph slightly (draw it!). So the sum is the total area of all those rectangles, which is closely approximated (for large N) by the area under the graph. There are calculus tricks to find this area: that is what the integral is doing. If you know calculus, you should be able to do it; if you don’t, you can take it for granted now, and it can be something to look forward to!)
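If you would like numerical reassurance about this approximation, the sketch below compares the sum with the area under $y=x^n$ from $x=0$ to $x=N$. It uses the standard calculus result that this area is $N^{n+1}/(n+1)$. The function names are illustrative, and the choice to measure the area starting from $x=0$ reflects the rectangle picture described above (the rectangle for the term $k^n$ sits over the interval from $k-1$ to $k$).

```python
from fractions import Fraction

def sum_of_powers(N, n):
    """The sum 1**n + 2**n + ... + N**n, computed exactly with integers."""
    return sum(k ** n for k in range(1, N + 1))

def sum_to_area_ratio(N, n):
    """Ratio of the sum to the area N**(n+1) / (n+1) under y = x**n on [0, N]."""
    return float(Fraction(sum_of_powers(N, n) * (n + 1), N ** (n + 1)))

# The ratio should be a little above 1 (the rectangles poke above the graph)
# and should approach 1 as N grows.
for N in (10, 100, 1_000, 10_000):
    print(N, sum_to_area_ratio(N, 30))
```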